In a typical MPLS deployment today, unicast traffic is label switched while multicast traffic is not. The SP core must therefore run multicast in order to provide multicast service to SP customers. In the case of MVPN, multicast traffic from the VPN customer is encapsulated in GRE with a multicast destination address within the SP cloud and is flooded downstream to the other PE devices.

A recent enhancement allows multicast service to be provided to end customers without enabling multicast on the SP core. This is done by using Multipoint LSPs. The following two protocols can be used to build a Multipoint LSP:

1. Multicast LDP (mLDP)
2. Point to Multipoint Traffic Engineering Tunnel (P2MP TE)

There are two types of Multipoint LSP:

1. Point to Multipoint LSP (P2MP LSP)
2. Multipoint to Multipoint LSP (MP2MP LSP)

Point to Multipoint LSP

A Point to Multipoint LSP is a unidirectional LSP with one ingress LSR and one or more egress LSRs, with labeled packet replication performed on the branch LSRs. A P2MP LSP can be established by both mLDP and P2MP TE. It suits applications such as IPTV video distribution.

Multipoint to Multipoint LSP

This is a bidirectional LSP, currently supported by mLDP only. It suits applications such as video conferencing, which require bidirectional communication. An MP2MP LSP can also be used as a P-Tunnel to provide multicast support over BGP/MPLS IP VPNs.

In this document, we discuss the basics of mLDP and the related configuration to enable P2MP and MP2MP LSPs using mLDP.

| Terminology | Description |
|---|---|
| P2MP LSP | LSP with one ingress LSR and one or more egress LSRs. |
| MP2MP LSP | LSP that connects a set of nodes in such a way that traffic sent by any node is delivered to all other nodes. |
| Root Node | Plays an important role in mLDP. Every LSR establishes the LSP towards the Root node. |
| Ingress LSR | LSR that sends data packets into an LSP. |
| Egress LSR | LSR that removes data packets from the LSP for further processing. |
| Transit/Midpoint LSR | LSR that replicates labeled packets from upstream to one or more downstream LSRs. |
| Bud LSR | LSR that has directly connected receivers (acts as an egress LSR) and also has one or more downstream LSRs (replicates packets to them). |
| Leaf Node | Any LSR with receivers. It can be either an Egress or a Bud LSR. |

mLDP overview:

mLDP, also known as Multipoint LDP, is an extension of the LDP protocol that helps establish P2MP and MP2MP LSPs in the network. With mLDP, LSP establishment is receiver driven: an egress LSR creates the LSP whenever it has an interested receiver. On learning of an interested receiver, mLDP builds the LSP towards the Root node. The Root address is derived either from the BGP next hop of the source or from static configuration.

Support for establishing multipoint LSPs must be negotiated during LDP session initialization. LDP capabilities have been extended with new TLVs, advertised in the LDP Initialization message, to negotiate P2MP (0x0508) and MP2MP (0x0509) support.
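The negotiation can be pictured as a set intersection: a multipoint LSP type is usable on a session only if both peers advertised the matching capability. The following is a minimal sketch under that assumption; the function name and the set-based representation are illustrative and do not come from any LDP implementation.

```python
# Capability TLV codes as quoted in the text above.
P2MP_CAPABILITY = 0x0508
MP2MP_CAPABILITY = 0x0509

MULTIPOINT_CAPABILITIES = {P2MP_CAPABILITY, MP2MP_CAPABILITY}


def negotiated_capabilities(local, peer):
    """Return the multipoint capabilities advertised by both ends of the session."""
    return set(local) & set(peer) & MULTIPOINT_CAPABILITIES


# Hypothetical session: we support both types, the peer supports P2MP only.
local_caps = {P2MP_CAPABILITY, MP2MP_CAPABILITY}
peer_caps = {P2MP_CAPABILITY}
usable = negotiated_capabilities(local_caps, peer_caps)
print([hex(code) for code in sorted(usable)])
```

In this example only P2MP LSPs could be signaled over the session, since the peer never advertised MP2MP support.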

As in LDP, the mLDP Label Mapping message carries a FEC TLV and a Label TLV. The FEC elements carried within the FEC TLV specify the set of packets to be mapped to that specific LSP. mLDP uses three different types of FEC element to build an MP LSP:

- P2MP FEC Element
- MP2MP Upstream FEC Element
- MP2MP Downstream FEC Element

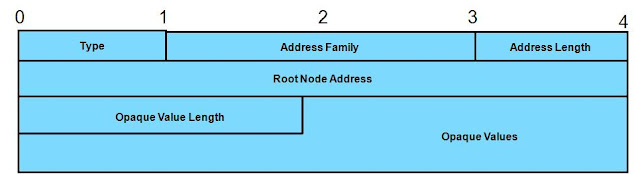

Below is the FEC encoding:

| Parameter | Description |
|---|---|
| FEC Type | Type of FEC element. P2MP (0x06); MP2MP Upstream (0x07); MP2MP Downstream (0x08) |
| Address Family | Root Node address type. IPv4 or IPv6 |
| Address Length | Root Node address length, carried in octets. IPv4 (4 octets, 32 bits); IPv6 (16 octets, 128 bits) |
| Root Node Address | Address of the Root Node |
| Opaque Value Length | Length of the Opaque value in octets |
| Opaque Values | One or more Opaque values used to identify the MP LSP. |
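To make the field order concrete, here is a small sketch that packs the fields from the table into bytes. The field widths (1-octet type, 2-octet address family, 1-octet address length, 2-octet opaque length) and the address family numbers (1 for IPv4, 2 for IPv6) reflect my reading of the mLDP specification and should be treated as assumptions; `encode_mp_fec` is a hypothetical helper name and the opaque bytes are arbitrary placeholders.

```python
import ipaddress
import struct

# FEC element types from the table above.
FEC_P2MP = 0x06
FEC_MP2MP_UPSTREAM = 0x07
FEC_MP2MP_DOWNSTREAM = 0x08

# Assumed address family numbers: 1 = IPv4, 2 = IPv6.
AF_IPV4, AF_IPV6 = 1, 2


def encode_mp_fec(fec_type, root, opaque):
    """Pack type, address family, address length, root address, opaque length, opaque value."""
    address = ipaddress.ip_address(root)
    family = AF_IPV4 if address.version == 4 else AF_IPV6
    packed_root = address.packed                      # 4 octets for IPv4, 16 for IPv6
    header = struct.pack("!BHB", fec_type, family, len(packed_root))
    return header + packed_root + struct.pack("!H", len(opaque)) + opaque


element = encode_mp_fec(FEC_P2MP, "10.0.0.1", b"\xaa\xbb")
print(element.hex())
```

Two LSRs that build the same bytes for a given root and opaque value are referring to the same MP LSP, which is why the opaque value matters for telling LSPs apart under one root.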

Root Node Address

This field carries the address of the Root Node towards which the Multipoint LSP will be established.

Opaque Value

The Opaque value plays a key role in differentiating MP LSPs in the context of a Root Node. The Opaque value is TLV based, and its content varies with the application for which the MP LSP is established. For example, it might be (S,G) for PIM SSM transit, or the default MDT value in the case of MVPN.

Below is the Opaque TLV format:

| Opaque Value | Description |
|---|---|
| Type = 00 | Manually or statically provisioned MP LSP |
| Type = 01 | Dynamically provisioned MP LSP for BGP MVPN (auto discovery) |
| Type = 02 | Statically provisioned MP LSP for MVPN |
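Since the Opaque value is TLV based, it can be sketched as a type, a length, and a value. The layout below (1-octet type followed by a 2-octet length) is an assumption based on the basic opaque TLV format in the mLDP specification, the type codes are copied from the table above, and the 4-byte LSP identifier used as the value is purely illustrative.

```python
import struct

# Type codes copied from the table above.
OPAQUE_STATIC = 0x00
OPAQUE_BGP_MVPN = 0x01
OPAQUE_STATIC_MVPN = 0x02


def encode_opaque(opaque_type, value):
    """Pack an opaque TLV as type (1 octet), length (2 octets), value."""
    return struct.pack("!BH", opaque_type, len(value)) + value


def decode_opaque(data):
    """Return (type, value) for a single opaque TLV at the start of data."""
    opaque_type, length = struct.unpack("!BH", data[:3])
    return opaque_type, data[3:3 + length]


# Hypothetical statically provisioned LSP identified by the number 100.
tlv = encode_opaque(OPAQUE_STATIC, struct.pack("!I", 100))
print(tlv.hex())
print(decode_opaque(tlv))
```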

P2MP LSP Establishment

1. Any Leaf node interested in receiving the application stream over the P2MP LSP identifies the corresponding Root Node and generates the FEC Element.
2. The Leaf node then identifies the UPSTREAM router towards the Root Node (based on its RIB entry) and sends it a Label Mapping for the FEC value.
3. Any Transit router, on receiving a P2MP Label Mapping message from a DOWNSTREAM router, confirms that the advertising router is not its UPSTREAM for the associated ROOT. Once confirmed, it checks for an existing state entry for the FEC element:
   a. If it already has a state entry, the advertised label is added to the table for replication.
   b. If it does not have an entry, it creates one and records the advertised label in the table for replication. It then allocates a local label for the FEC and advertises it to the UPSTREAM router towards the ROOT.
4. The ROOT node, on receiving a P2MP Label Mapping from a DOWNSTREAM router, creates a state entry if it does not have one and uses the LSP to send the desired traffic.
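The steps above can be walked through with a toy model. This is a sketch only: the `Lsr` class, the `build_topology` helper, the topology (root R, transit P, leaves PE1 and PE2) and the label ranges are all hypothetical, and real mLDP carries the mappings in LDP messages rather than method calls.

```python
import itertools


class Lsr:
    """One LSR holding P2MP state per FEC (illustrative model, not a real LDP stack)."""

    def __init__(self, name, upstream=None, first_label=16):
        self.name = name
        self.upstream = upstream                     # next hop towards the root; None on the root
        self._labels = itertools.count(first_label)  # this LSR's own label space
        self.state = {}                              # fec -> {"local": label, "downstream": {...}}

    def join(self, fec):
        """Steps 1-2: a leaf builds the FEC and sends a Label Mapping upstream."""
        if fec in self.state:
            return
        local = next(self._labels)
        self.state[fec] = {"local": local, "downstream": {}}
        self.upstream.receive_mapping(fec, local, self)

    def receive_mapping(self, fec, label, sender):
        """Steps 3-4: record the downstream label; new transit state is advertised upstream."""
        if sender is self.upstream:
            raise ValueError("label mapping received from the upstream LSR")
        is_new = fec not in self.state
        entry = self.state.setdefault(fec, {"local": None, "downstream": {}})
        entry["downstream"][sender.name] = label     # 3a and 3b: label used for replication
        if is_new and self.upstream is not None:     # 3b: allocate a label, advertise upstream
            entry["local"] = next(self._labels)
            self.upstream.receive_mapping(fec, entry["local"], self)


def build_topology():
    root = Lsr("R", first_label=100)
    transit = Lsr("P", upstream=root, first_label=200)
    pe1 = Lsr("PE1", upstream=transit, first_label=300)
    pe2 = Lsr("PE2", upstream=transit, first_label=400)
    return root, transit, pe1, pe2


root, transit, pe1, pe2 = build_topology()
fec = ("R", "opaque-1")   # FEC modeled as (root address, opaque value)
pe1.join(fec)
pe2.join(fec)
print(transit.state[fec])
print(root.state[fec])
```

The second join only adds a replication entry on P. P already advertised its own label to the root when PE1 joined, so nothing further is signaled upstream.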

MP2MP LSP Establishment

As we saw earlier, an MP2MP LSP is a bidirectional LSP that must be signaled in both the upstream and downstream directions. The upstream path uses the MP2MP UPSTREAM FEC and is signaled in ORDERED mode, while the downstream path uses the MP2MP DOWNSTREAM FEC.

1. Any interested Leaf node allocates a local label for the MP2MP DOWNSTREAM FEC and advertises it in a Label Mapping message to the upstream LSR. The leaf also expects an MP2MP UPSTREAM label mapping for the FEC from the upstream LSR.
2. Any Transit LSR, on receiving a label mapping for the MP2MP DOWNSTREAM FEC, confirms that it was not received from its UPSTREAM LSR for the associated ROOT. Once confirmed, it checks for an existing state entry for the FEC element:
   a. If it already has a state entry, the advertised label is added to the table for replication. It then checks whether it has received the UPSTREAM label mapping for the FEC value and, if so, advertises a local upstream label to the downstream LSR.
   b. If it does not have a state entry, it creates one and advertises its local downstream label to the UPSTREAM LSR towards the ROOT. It then waits for an UPSTREAM label for the FEC from the UPSTREAM LSR before advertising a local upstream label to the DOWNSTREAM LSR.
3. The ROOT node, on receiving an MP2MP downstream label mapping from a DOWNSTREAM LSR, creates a state entry if it does not have one, generates a local upstream label and advertises it to the DOWNSTREAM LSR.
4. Each DOWNSTREAM LSR, on receiving the upstream label mapping, generates a local upstream label for the FEC and advertises it to its own DOWNSTREAM LSRs.
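The two signaling directions can be sketched with another toy model, tracking a single FEC per LSR for brevity. As before, this is an illustration under stated assumptions: the `Mp2mpLsr` class, the `build_mp2mp_topology` helper, the topology and the label ranges are hypothetical, and the method calls stand in for LDP Label Mapping messages.

```python
import itertools


class Mp2mpLsr:
    """One LSR's state for a single MP2MP FEC (illustrative model only)."""

    def __init__(self, name, upstream=None, first_label=16):
        self.name = name
        self.upstream = upstream          # next hop towards the root; None on the root
        self._labels = itertools.count(first_label)
        self._children = {}               # downstream neighbor name -> Mp2mpLsr
        self.down_rx = {}                 # neighbor name -> downstream label it advertised to us
        self.up_tx = {}                   # neighbor name -> upstream label we advertised to it
        self.down_tx = None               # downstream label we advertised to our upstream LSR
        self.up_rx = None                 # upstream label received from our upstream LSR

    def join(self):
        """Step 1: a leaf advertises a downstream label and waits for an upstream label."""
        if self.down_tx is None:
            self.down_tx = next(self._labels)
            self.upstream.receive_downstream_mapping(self, self.down_tx)

    def receive_downstream_mapping(self, sender, label):
        if sender is self.upstream:
            raise ValueError("downstream mapping received from the upstream LSR")
        self.down_rx[sender.name] = label
        self._children[sender.name] = sender
        if self.upstream is None or self.up_rx is not None:
            # Step 3 (root) or step 2a (transit that already holds an upstream label).
            self._advertise_upstream_label(sender)
        if self.upstream is not None and self.down_tx is None:
            # Step 2b: new state, so advertise our own downstream label towards the root.
            self.down_tx = next(self._labels)
            self.upstream.receive_downstream_mapping(self, self.down_tx)

    def receive_upstream_mapping(self, label):
        """Step 4: pass upstream labels on to downstream LSRs still waiting for one."""
        self.up_rx = label
        for child in self._children.values():
            if child.name not in self.up_tx:
                self._advertise_upstream_label(child)

    def _advertise_upstream_label(self, child):
        self.up_tx[child.name] = next(self._labels)
        child.receive_upstream_mapping(self.up_tx[child.name])


def build_mp2mp_topology():
    root = Mp2mpLsr("R", first_label=100)
    transit = Mp2mpLsr("P", upstream=root, first_label=200)
    pe1 = Mp2mpLsr("PE1", upstream=transit, first_label=300)
    pe2 = Mp2mpLsr("PE2", upstream=transit, first_label=400)
    return root, transit, pe1, pe2


root, transit, pe1, pe2 = build_mp2mp_topology()
pe1.join()
pe2.join()
print("P downstream labels received:", transit.down_rx)
print("P upstream labels advertised:", transit.up_tx)
```

When PE1 joins, P has to wait for the root's upstream label before it can answer PE1. When PE2 joins later, P already holds that label and answers immediately, which is the difference between cases 2b and 2a.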

Root Node Redundancy

The Root Node clearly plays a key role in MP LSP establishment. The P2MP LSP and the MP2MP downstream LSP are established towards the ROOT, while the MP2MP upstream LSP is established from the ROOT. Root node redundancy is therefore a major factor to address as part of network resiliency.

For redundancy, the MP LSP is established towards two different ROOT nodes. Any Leaf can receive traffic from either ROOT node.

In the case of a P2MP LSP, traffic for a particular FEC should be sent by only one ROOT, to avoid packet duplication and inefficient bandwidth utilization.

In the case of an MP2MP LSP, a leaf node should send traffic to only one ROOT, while it can receive traffic from any ROOT. This again avoids packet duplication and inefficient bandwidth utilization.
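The "send to only one ROOT" rule for MP2MP can be sketched as a selection function on the leaf. The text does not say how the root is chosen, so the policy here (lowest reachable root address) is an assumption made purely for illustration, as are the function name and the addresses.

```python
import ipaddress


def select_sending_root(roots, reachable):
    """Pick the single root this leaf sends to: the lowest reachable address (assumed policy)."""
    candidates = [root for root in roots if root in reachable]
    if not candidates:
        raise LookupError("no reachable root")
    return min(candidates, key=ipaddress.ip_address)


# Hypothetical leaf that has joined the MP LSPs rooted at both nodes.
roots = ["10.0.0.2", "10.0.0.1"]
print(select_sending_root(roots, reachable={"10.0.0.1", "10.0.0.2"}))
print(select_sending_root(roots, reachable={"10.0.0.2"}))
```

The leaf still accepts traffic arriving on the LSP of either root. Only the sending side is pinned to one root, and it moves to the other root when the first becomes unreachable.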

Courtesy:

http://tools.ietf.org/html/draft-ietf-mpls-ldp-p2mp-15

http://tools.ietf.org/html/draft-bishnoi-mpls-mldp-opaque-types-01